The previous blog post highlighted the importance of Latent Variables in Bayesian modelling. Let’s now walk through different aspects of machine learning and see how Bayesian methods can help us in designing the solutions. We will also talk about the additional capabilities and insights we can get through this method.

Probabilistic Clustering

Clustering is the method of splitting the data into separate chunks based on the inherent properties of the data. When we use the word ‘probabilistic’, it implies that each point in the given data is a part of every cluster, but with some probability.

The maximum probability cluster is the one that defines the point. Now some of the things we need to understand here are -

• How each cluster will be defined probabilistically?

• How many clusters will be formed?

Answer to these above 2 questions will help us define each point in the data as a probabilistic part of each cluster. So, let us first formulate the solutions to these questions.

How each cluster will be defined probabilistically?

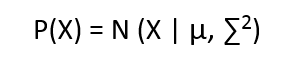

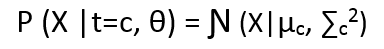

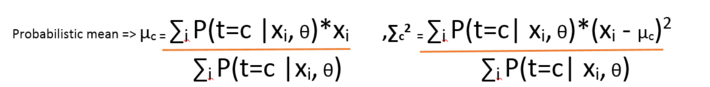

We define each cluster to have come from a Gaussian distribution, having mean = µ and standard deviation = ∑, and the equation looks like

How many clusters will be formed?

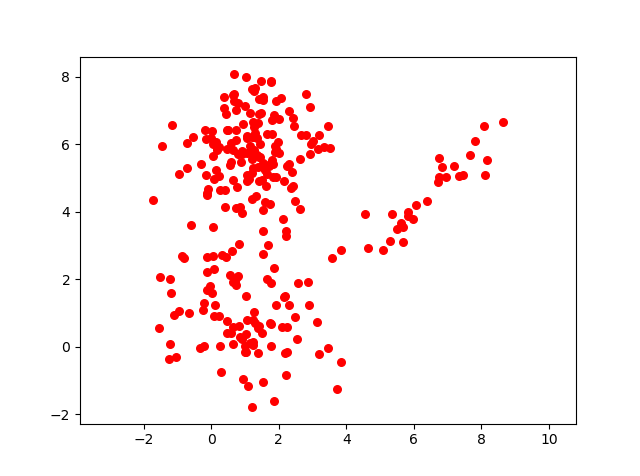

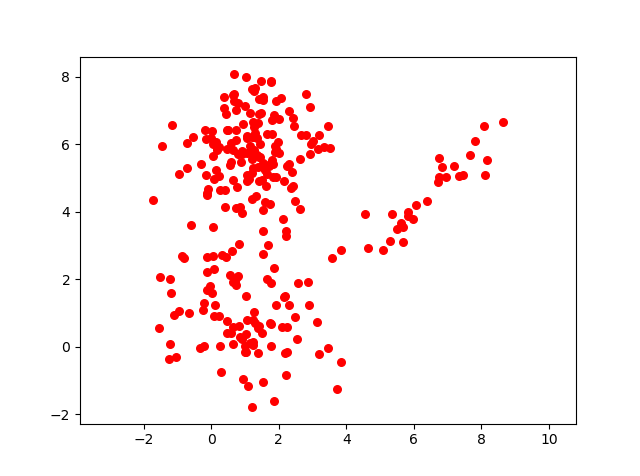

Let us take a sample data set visual :

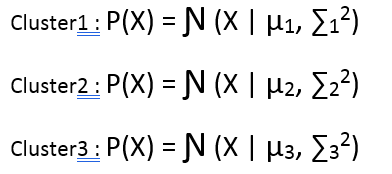

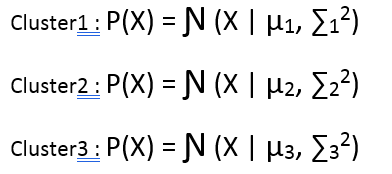

Upon close observation, one can see that there are 3 clusters in it. So, lets define these 3 clusters probabilistically.

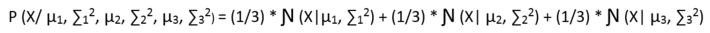

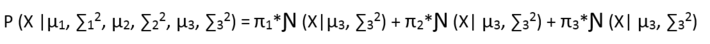

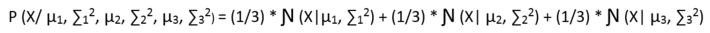

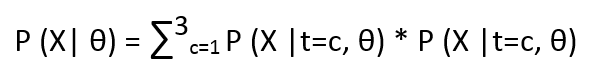

Now, let us try to write an equation which can define the probability of a point in the data to be a part of every cluster. But before that, we need a mechanism or a probability function that can define which cluster a point can be from and with what probability. Let us say that a point can be a part of any cluster with equal probability of 1/3. Then the probabilistic distribution of a point would look like:

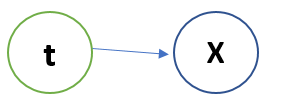

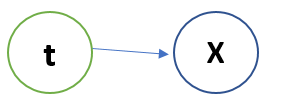

But in the above case, it is an assumption that every point can belong to any cluster with equal probability. But is that correct? No! So, let’s use a Latent Variable which knows the distribution of every point belonging to every cluster. The Bayesian model looks like:

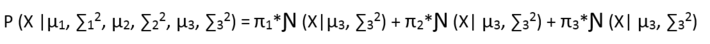

The above model states that Latent Variable t knows, to which cluster the point x belongs and with what probability. So, if we re-write the above equation, it looks like -

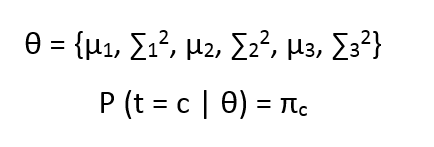

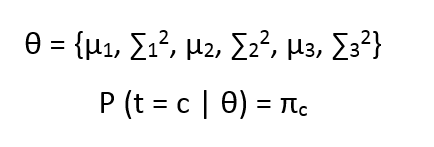

Now let us call all our parameters as

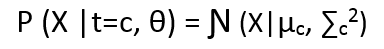

the above equation states that the probability by which the Latent variable takes value c, given all the parameters is πc

The above equation states that probability of a point sampled from cluster c, given that it has come from cluster c is Ɲ (X|µc, ∑c2).

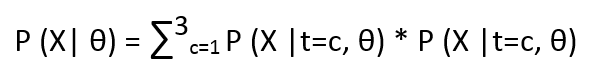

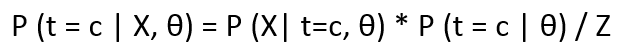

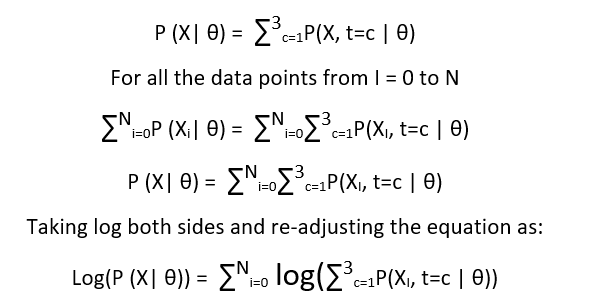

Now we know that P(X, t=c | θ) = P(X| t=c, θ) * P(t=c | θ) from our Bayesian model. We can marginalize t to compute

The above equation is exactly same as our original one.

Fact Checks

• In the equation P (t = c | θ) = πc, there is no condition over the data points x, and thus it can be considered as Prior distribution and as a hard classifier.

• Marginalization was done on t, to have the exact expression on LHS as was chosen originally. Therefore, t remains latent and unobserved.

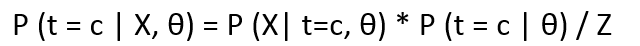

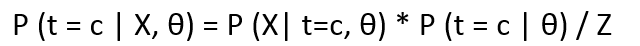

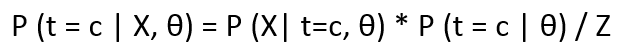

• if we want the probability of a point belonging to a specific cluster, given the parameters θ and the point x, then the expression looks like:

Where LHS is the posterior distribution of t w.r.t X and θ. Z is the normalization constant and can be calculated as :

The Chicken-Egg Problem in Probabilistic Clustering

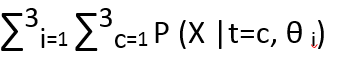

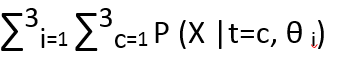

Now we are clear that in the Bayesian model we made between t and x, when we say the latent variable knows the cluster number of every point, we say it probabilistically. This means the formulae applied is the posterior distribution, as we discussed in Fact check section. Now the problem is, to compute θ, we should know t and to compute t, we should know θ. We will see this in the following equations:

And the posterior t is computed using

The above expression clearly shows that means and standard deviations can be computed if we know the source t and vice-versa.

The next post will show how to solve the above problem using Expectation Maximization algorithm.

Expectation – Maximization Algorithm

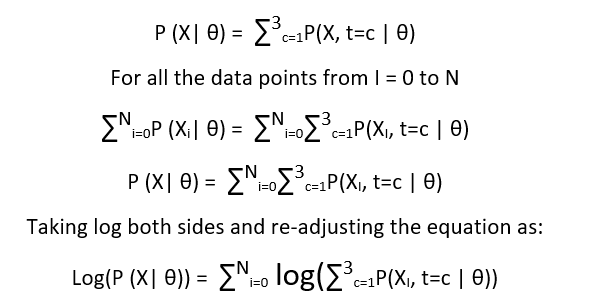

From our previous blog discussion, we know that marginalized posterior distribution looks like -

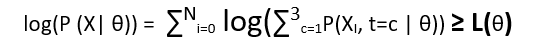

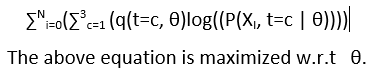

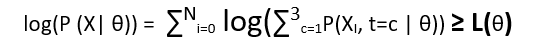

Now we need to maximize the above expression w.r.t θ. The problem here is that maximization of the above expression is not simple, as function usually has many local maxima. We can use gradient – decent here but it will make the process long. To solve this, we use a different approach - we will try and construct a lower bound of the above expression using Jensen’s inequality. And once we build our lower bound, we will try and maximize it.

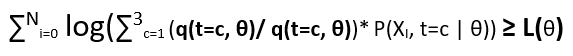

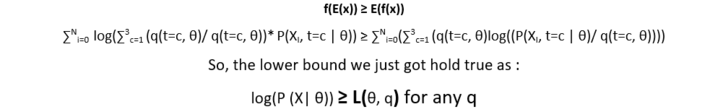

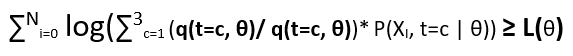

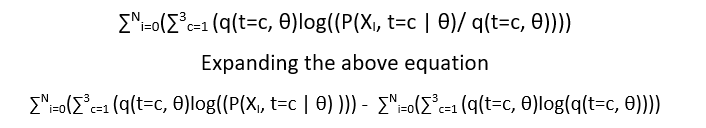

The lower bound is also called variational lower bound and we are applying Jensen’s inequality to compute the lower bound. Consider the log as our function f and let us introduce a new function q(t=c, θ), and multiply it in denominator and numerator. So the new equation looks like:

Now consider the ratio (P(XI, t=c | θ)/ q(t=c, θ)) as random variable and q(t=c, θ) as the probabilistic coefficient as ∑3c=1 q(t=c, θ) = 1.

Now from Jensen’s inequality, we know that:

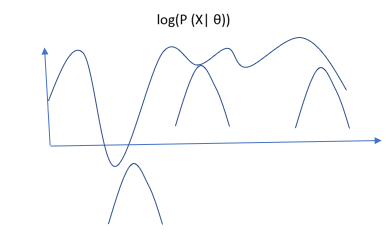

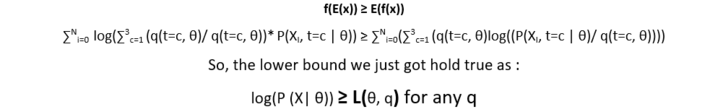

Graphically,

All the above small curves are the derived from lower bound L(θ, q).

Expectation Step

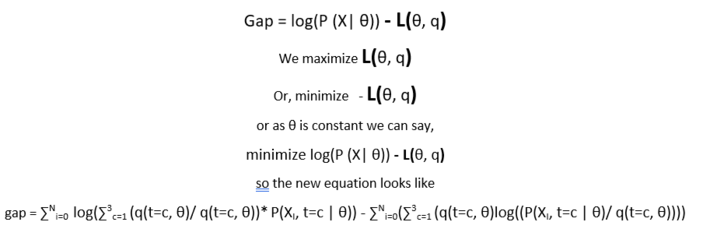

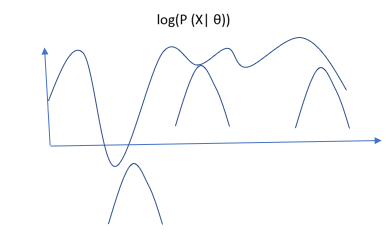

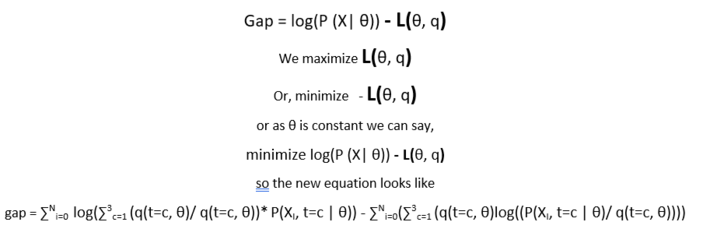

It is clear that the lower bound will always be beneath the actual function, and we need to maximize the lower bound to be as much closer to actual function as possible. In expectation step we fix our θ and try to maximize the lower bound. We do it by reducing the gap between actual function and the lower bound. The equation is as follows :

putting in some linear algebra, the equation ends up in the expression of KL divergence between q and posterior t

The solution of expectation step is :

q(t=c) = P(t=c | Xi ., θ)

Maximization step

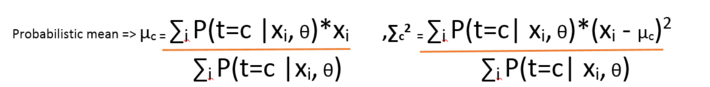

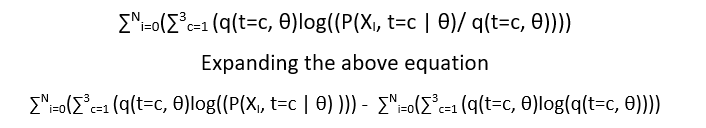

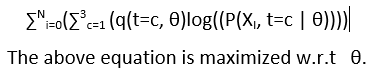

Once we get the gap reduced between the variational lower function L(θ, q) and log(P(X |θ)), we maximize our lower bound function w.r.t θ.

The second component of above equation is constant w.r.t θ. Therefore, we drop it, the new equation remains is:

In the next post we will continue our discussion and introduce Gaussian mixture model. We will see how to implement Expectation – Maximization algorithm to perform clustering.

This point of view article originally published on datasciencecentral.com. Data Science Central is the industry's online resource for data practitioners. From Statistics to Analytics to Machine Learning to AI, Data Science Central provides a community experience that includes a rich editorial platform, social interaction, forum-based support, plus the latest information on technology, tools, trends, and careers.

Click here for the article