AI enthusiasm is moderated by governance concerns

The modern field of Artificial Intelligence (AI), which began in 1956, has witnessed both intense interest and skepticism over the years, with much happening since its theoretical formulation. While traditional AI, in the form of predictive models, has been around for a while, the field has received a fresh injection of interest with the emergence of large language models (LLMs).

Buoyed by the potential impact of these LLMs, businesses worldwide have shown strong interest in exploring how they can integrate this new AI technology into their operations. While the upside of LLMs is significant, challenges around compliance and safety remain.

In this blog we will explore how enterprises can embark on their AI journey responsibly.

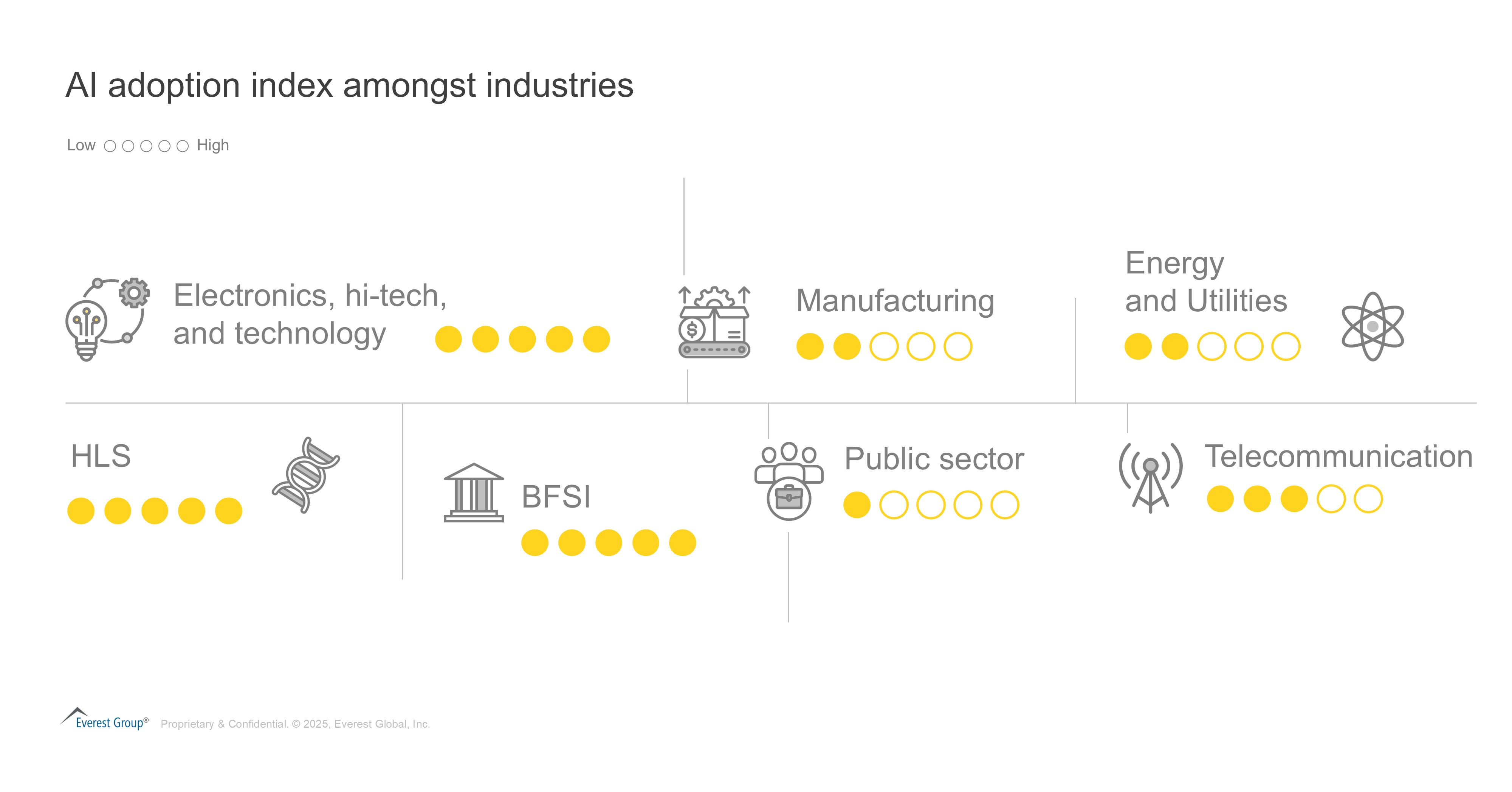

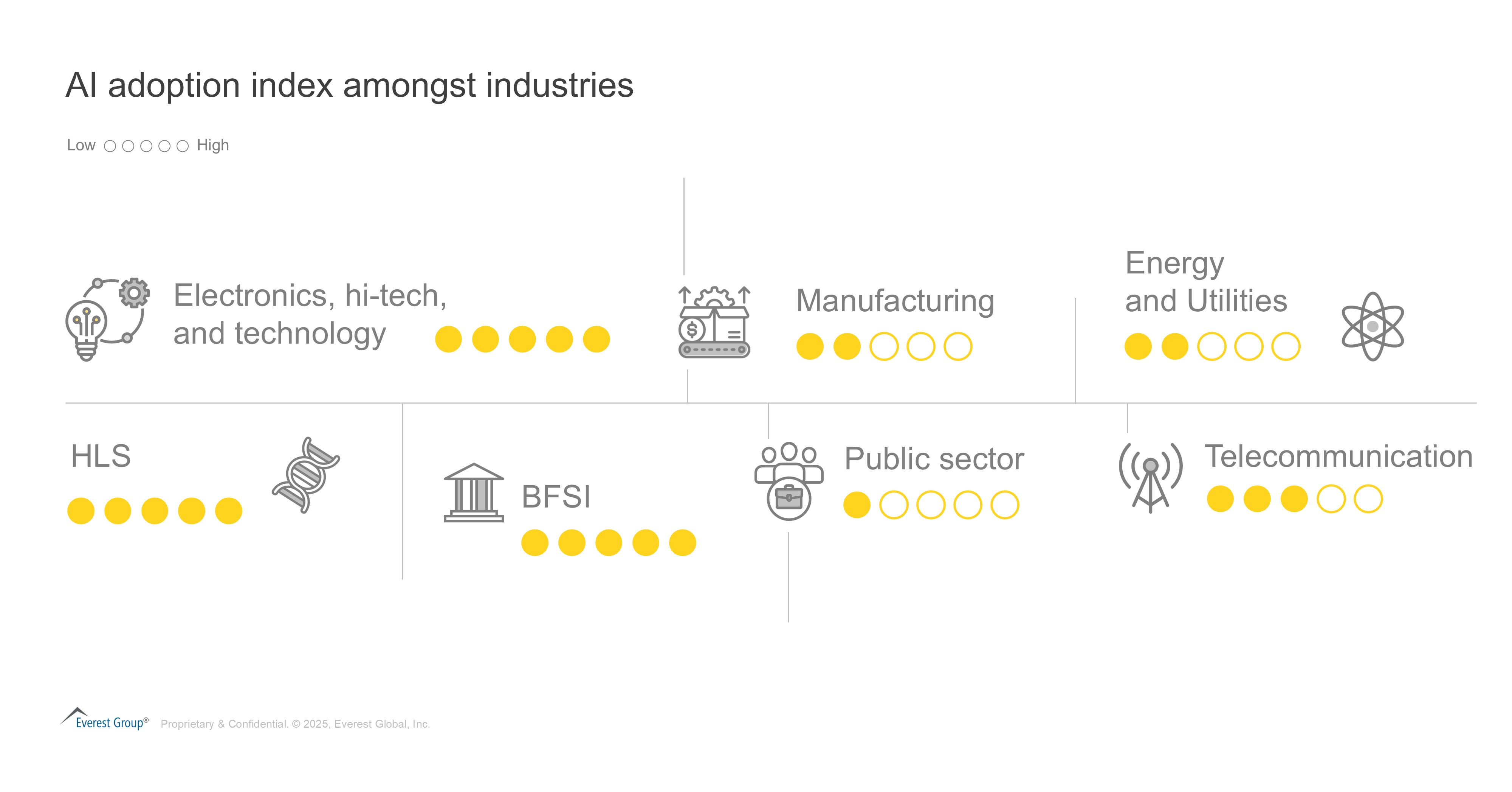

Exhibit 1 gives us an overview of the level of AI adoption across industries. However, the adoption story has been underpinned by pressing concerns related to data privacy, ethical usage, legal and regulatory compliance, and transparency. These concerns have compelled organizations to look closely at AI governance and, in particular, the ethical aspects of AI adoption and usage. Enterprises are looking to understand how they can evolve their existing governance and compliance practices to address new and emerging challenges from Generative AI (gen AI) adoption.

Responsible AI and AI governance are not the same

In the world of AI, Responsible AI (RAI) and AI governance are two terms that organizations often use interchangeably to explain how AI systems are governed, managed, and scaled. However, there is a nuanced difference between them. RAI is concerned with developing a strategy for designing, developing, and deploying AI systems that are fair, transparent, accountable, and aligned with organizational values. Microsoft, for example, puts RAI into practice through its AI, Ethics, and Effects in Engineering and Research (AETHER) committee, which advises leadership on ethical AI development and implementation.

AI governance, in contrast, is more oriented toward operationalizing RAI principles. It encompasses the frameworks, policies, guardrails, and processes that organizations establish to oversee and regulate AI systems, ensuring their compliance with laws, standards, and ethical guidelines. Following the previous example, Microsoft has implemented its Responsible AI Standard, a framework that provides detailed processes for managing AI risks, ensuring compliance, and enforcing accountability.

Organizations consider RAI from the outset of AI adoption in order to set the guidelines and policies that keep AI systems ethically compliant with organizational standards. AI governance, on the other hand, adheres to these guidelines and policies when developing guardrails, frameworks, and oversight mechanisms.

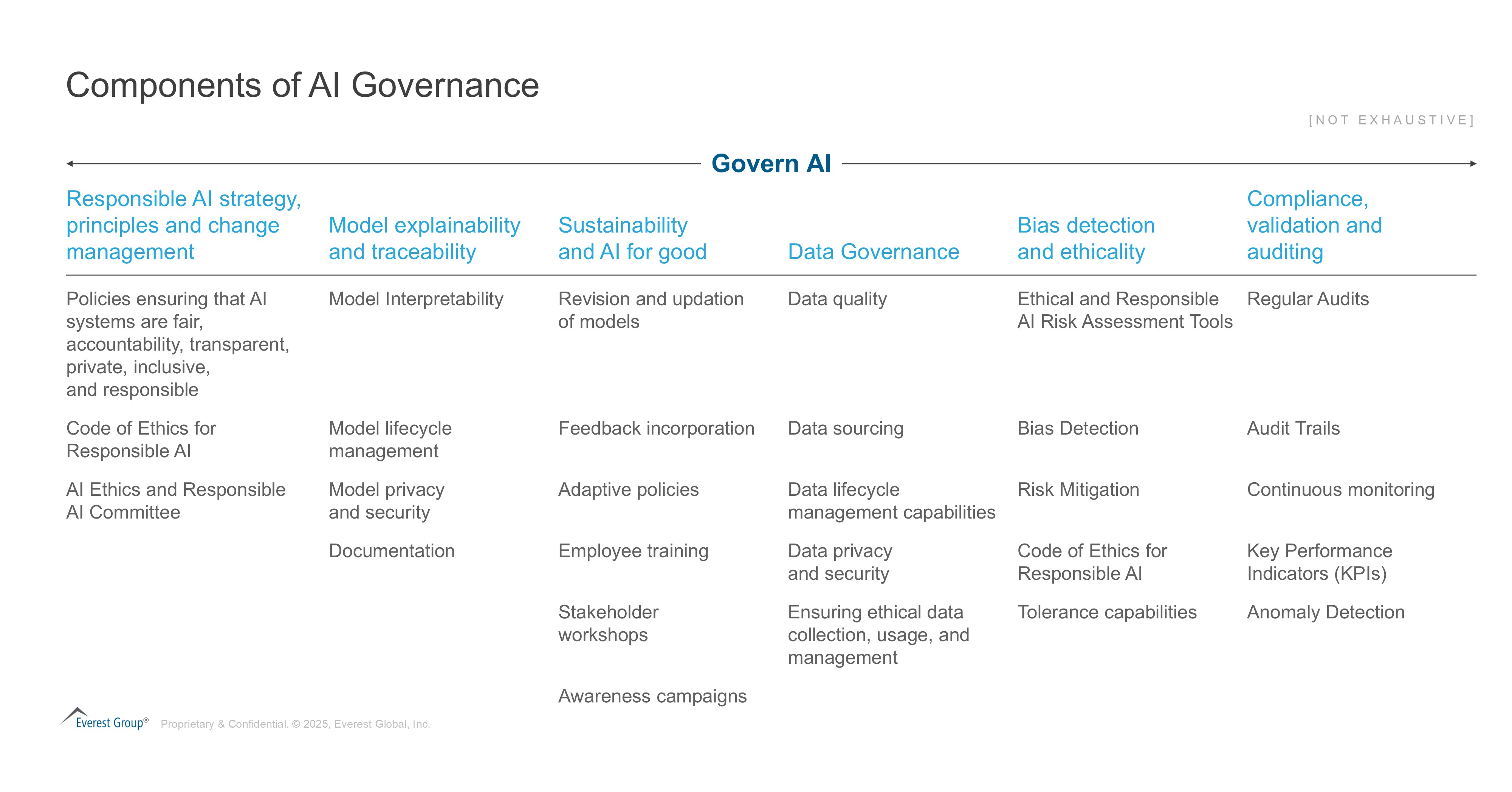

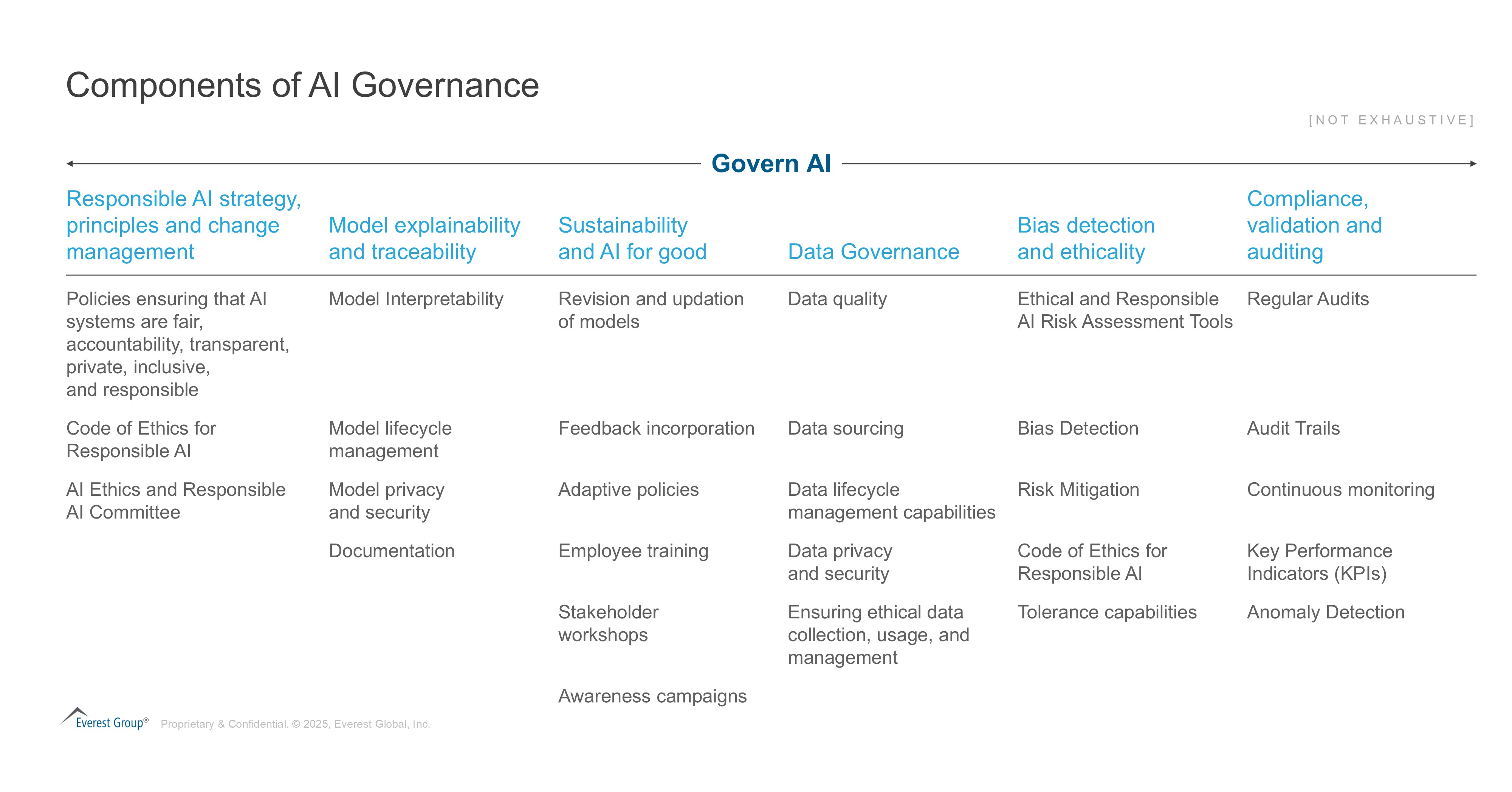

Exhibit 2 showcases the governance mechanism for AI in which the RAI strategy and principles inform the basic requirements and policies for further governance.

Service providers are becoming enablers for AI governance and RAI adoption

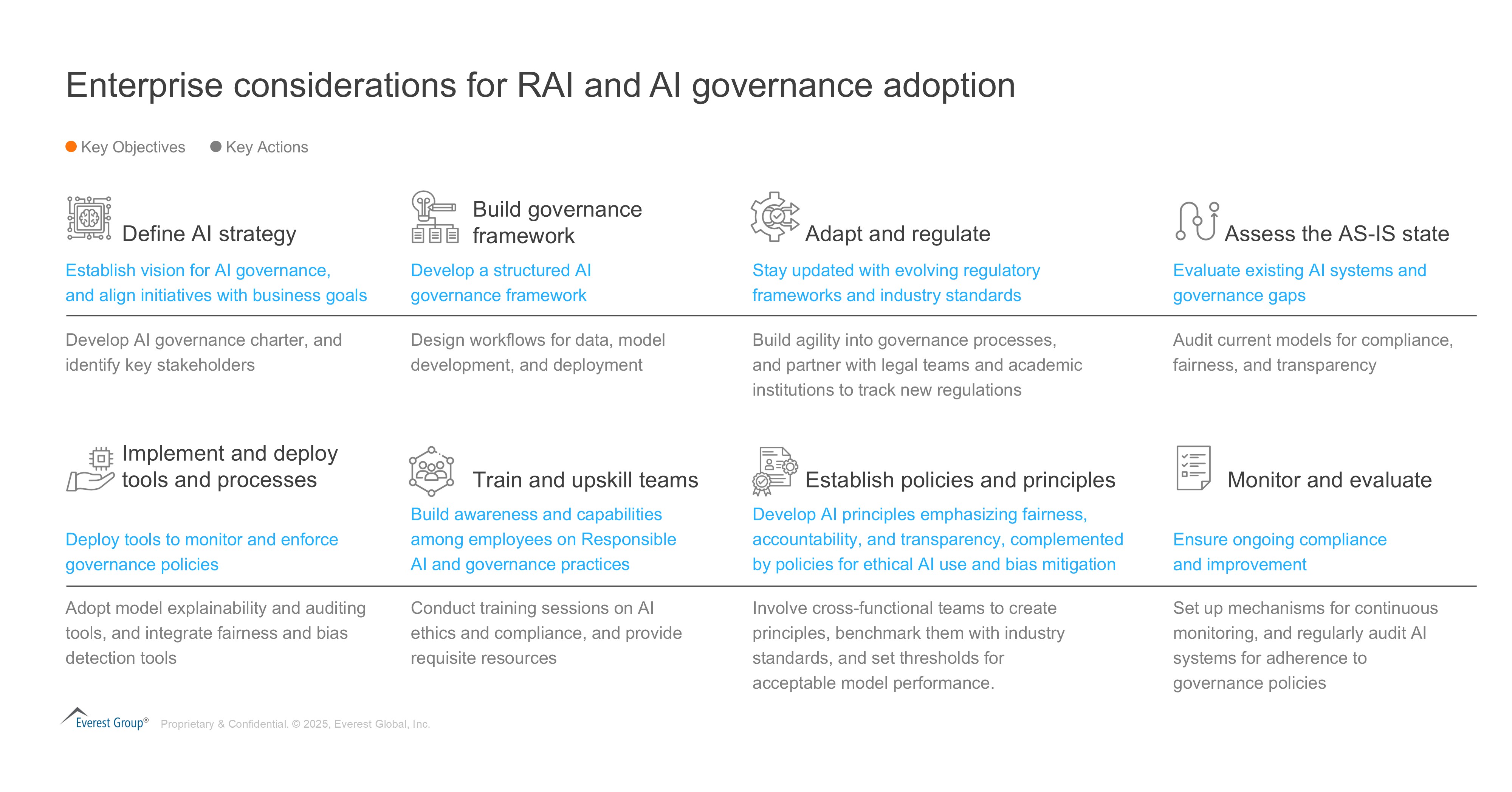

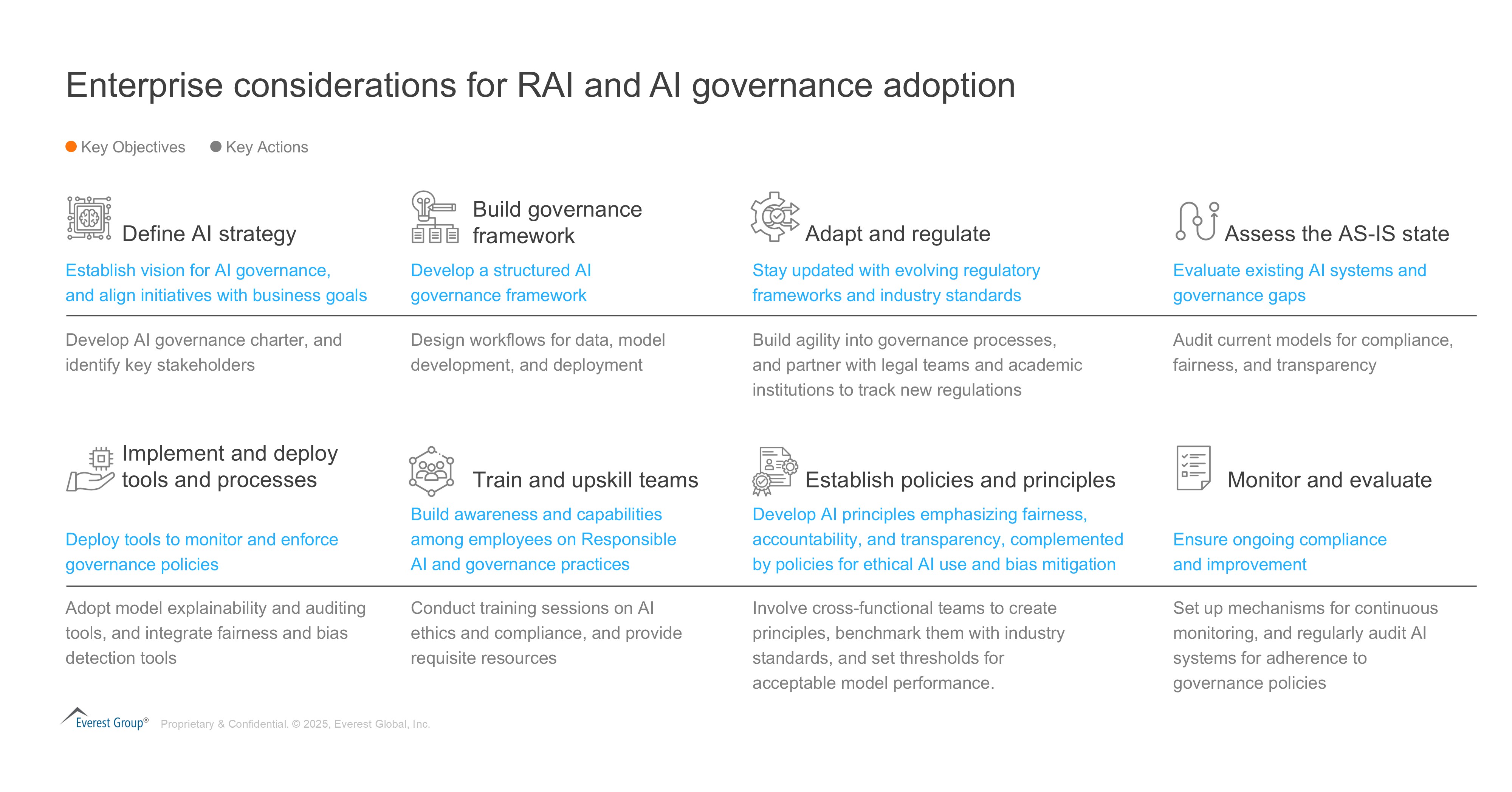

As enterprises look to evolve their AI governance with RAI principles, it is imperative that they draft a clear plan of action. Such a plan not only articulates the roadmap for AI governance but also clarifies roles and responsibilities for driving adoption. Enterprises looking to adopt RAI should review Exhibit 3, which outlines key steps to take when embarking on their own RAI adoption journey.

Working with the right set of service providers can help enterprises navigate the evolving AI governance landscape and strengthen AI governance within their organization. Service providers offer several tools, frameworks, and services tailored to organizational AI governance needs. These span AI audits, employee training, and tools and technologies that help manage governance efforts on an ongoing basis.

RAI and AI governance face a bumpy road

Adopting RAI and AI governance comes with its own set of challenges. These span regulations, talent, and measurement issues. Below we highlight some of the most pressing concerns facing enterprises looking to evolve their AI governance.

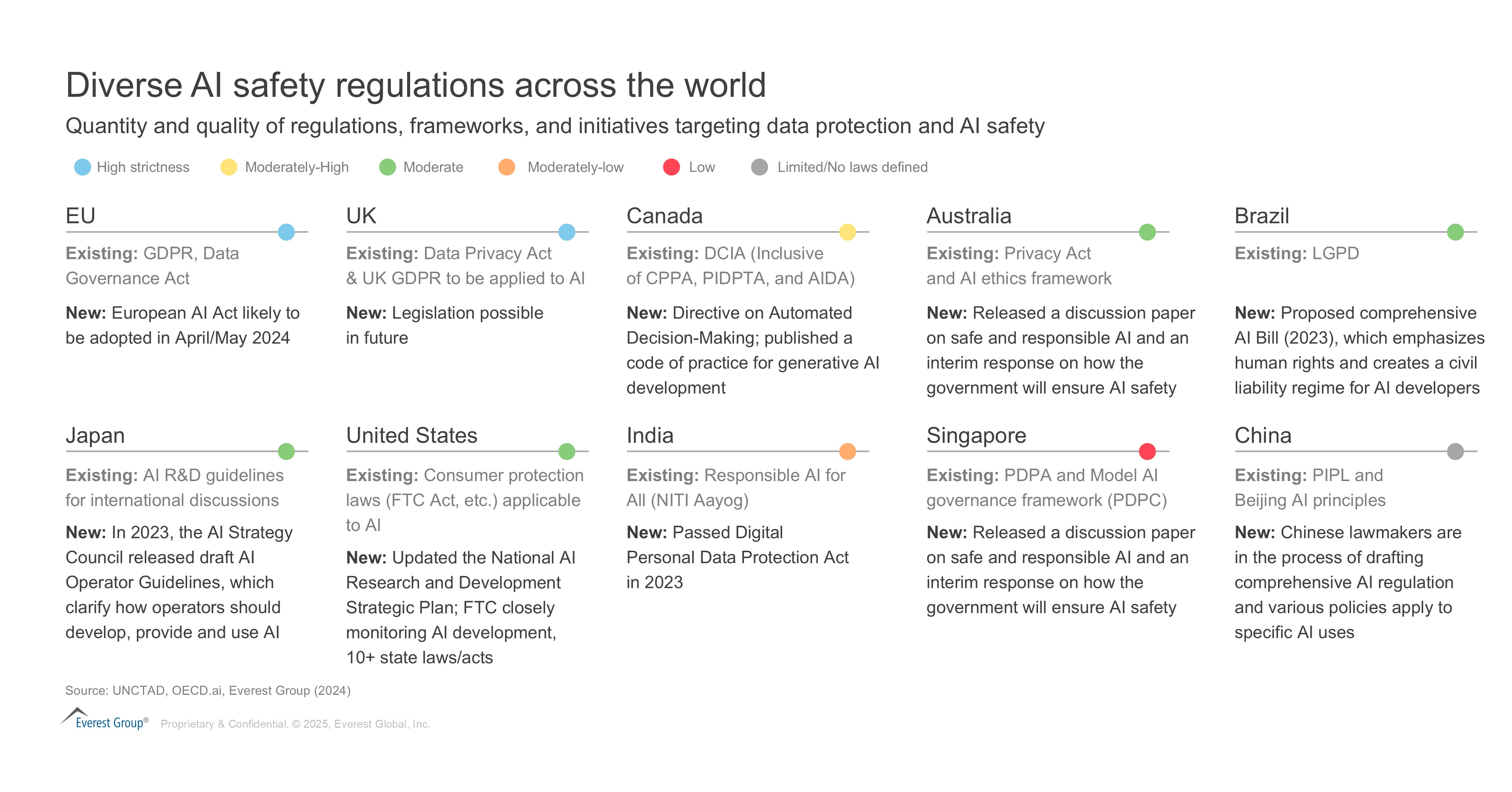

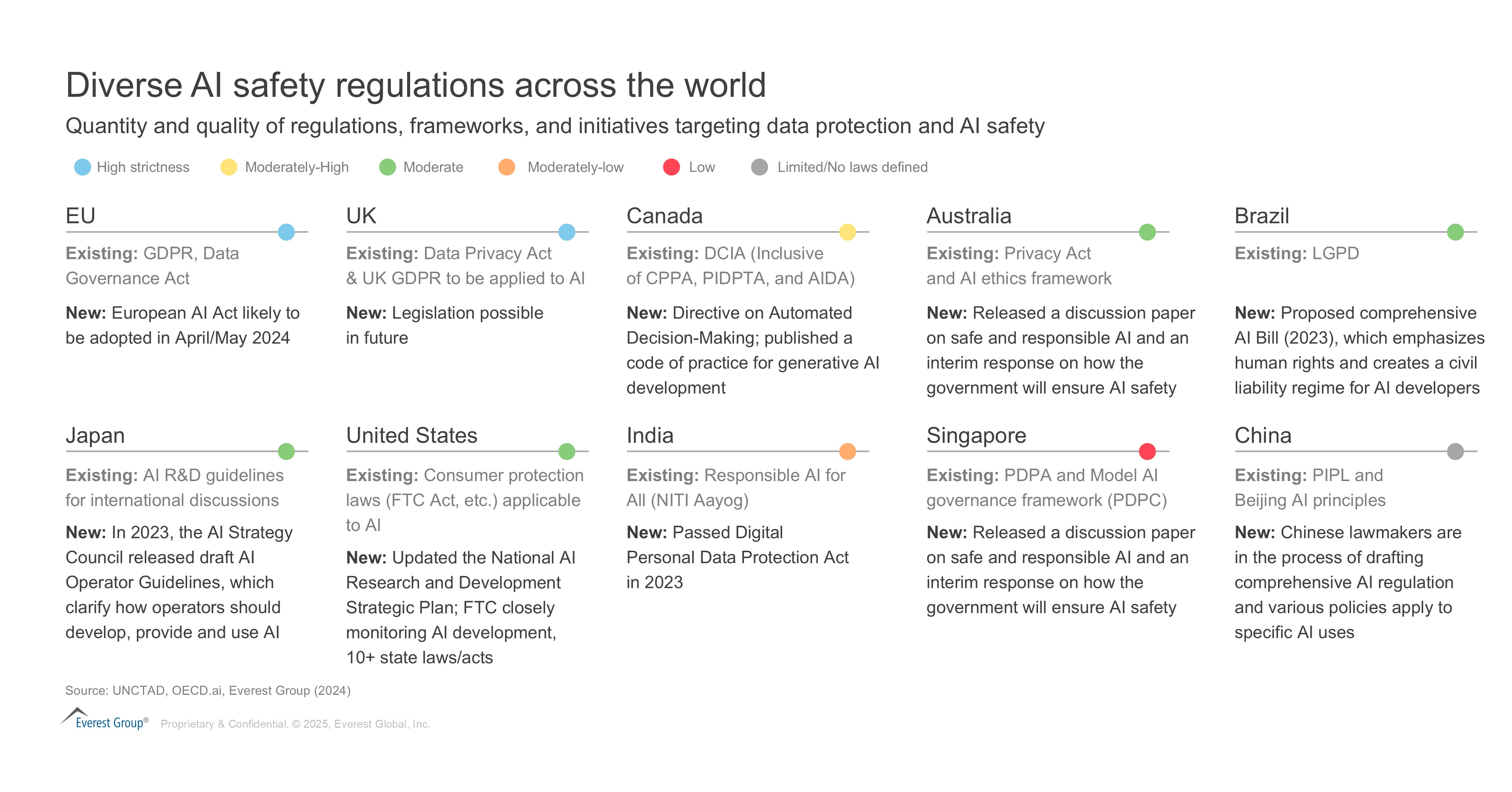

● Fragmented government policies: Countries around the world have their own set of AI governance policies and regulations. This regulatory fragmentation makes it difficult for companies operating globally to comply with different standards.

This results in increased inefficiencies, higher compliance costs, and stalled innovation, as companies struggle to navigate conflicting regulations.

Exhibit 4 lists the diverse AI safety regulations across the world and their relative strictness.

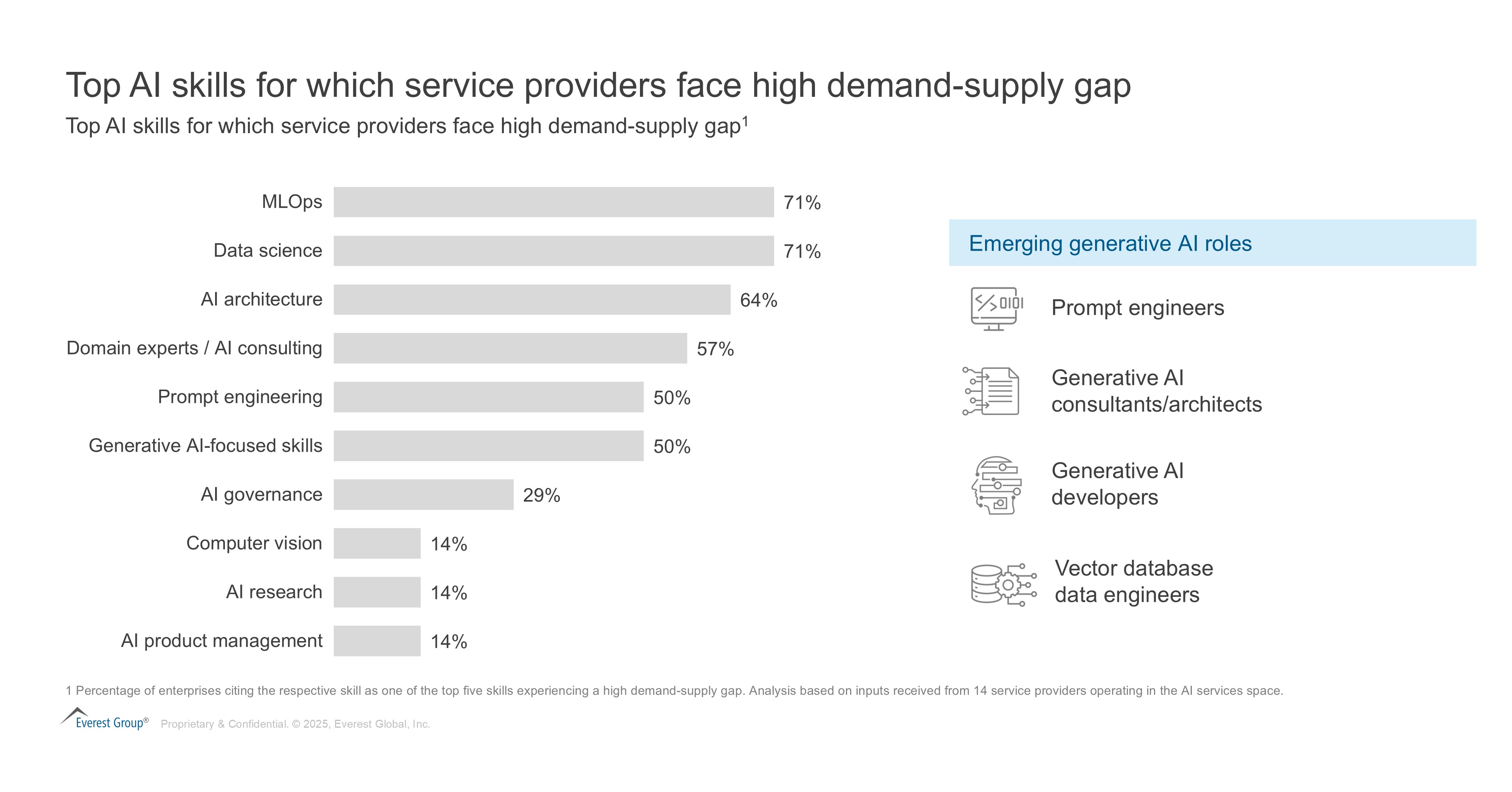

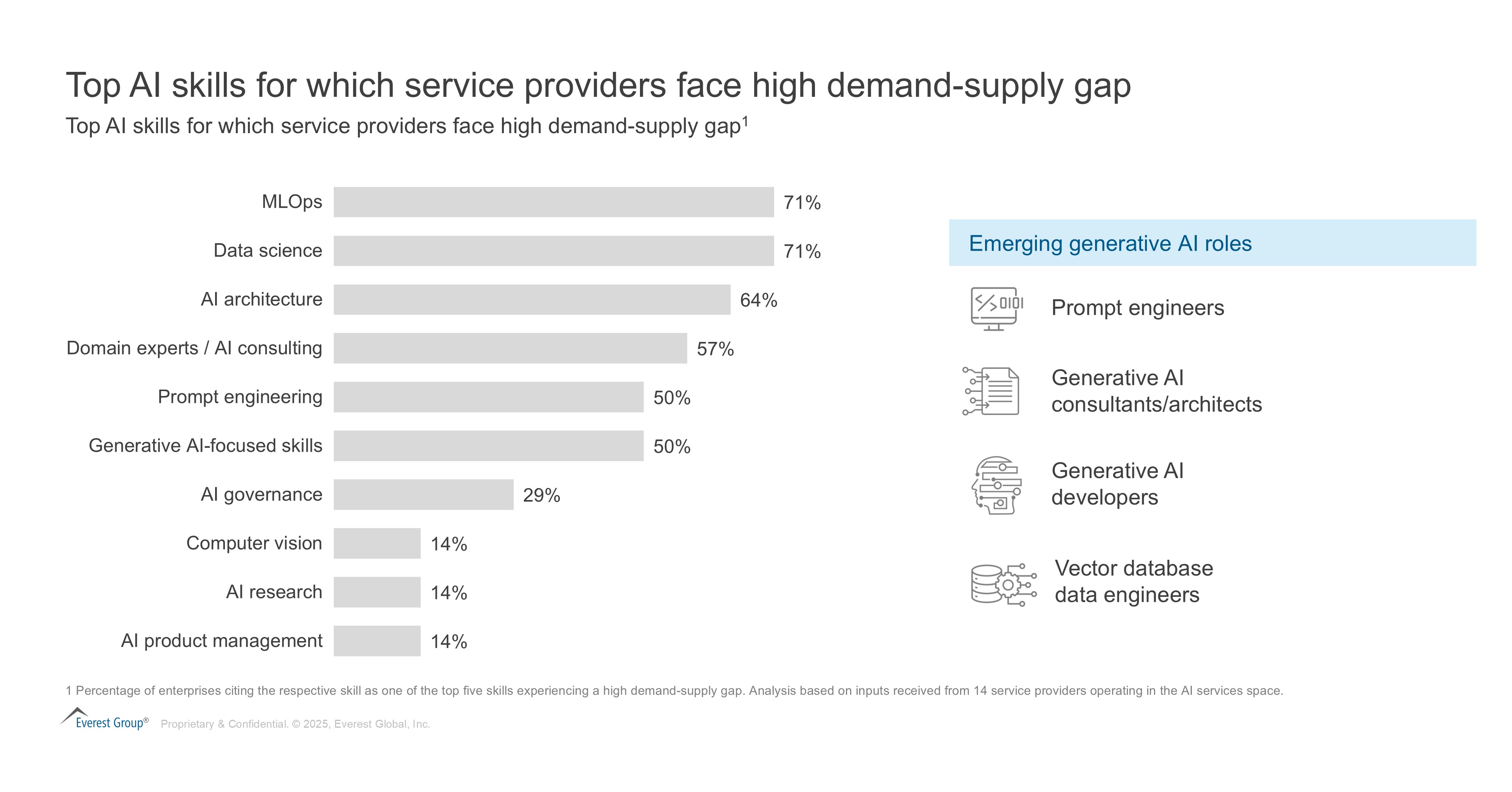

● Demand-supply gaps in AI governance talent: The shortage of skilled professionals such as regulators, auditors, and AI ethicists delays AI governance implementation, leaving systems unchecked and increasing the risks of bias, privacy violations, and accountability lapses.

Exhibit 5 highlights the top AI skills facing demand-supply gaps.

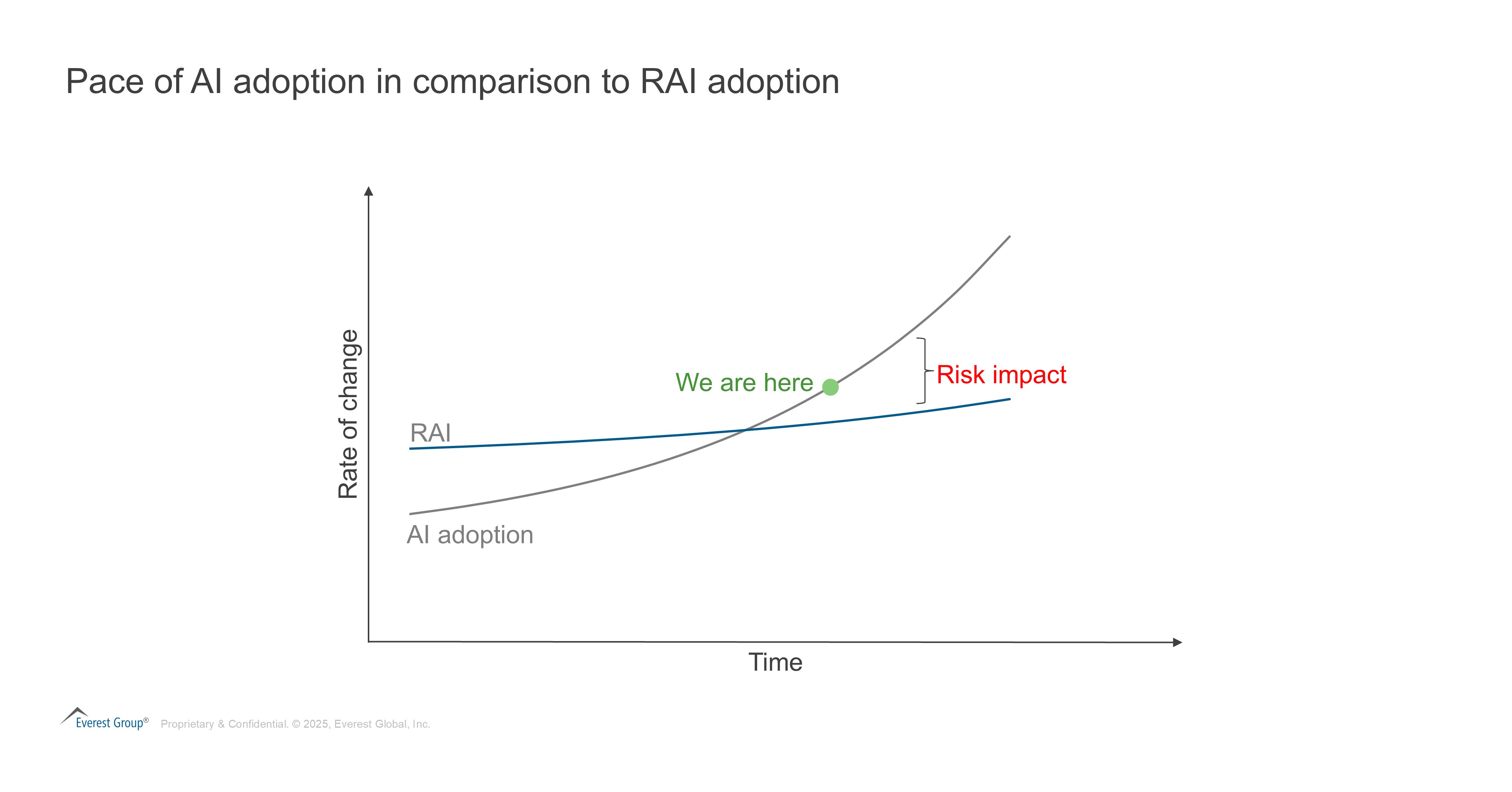

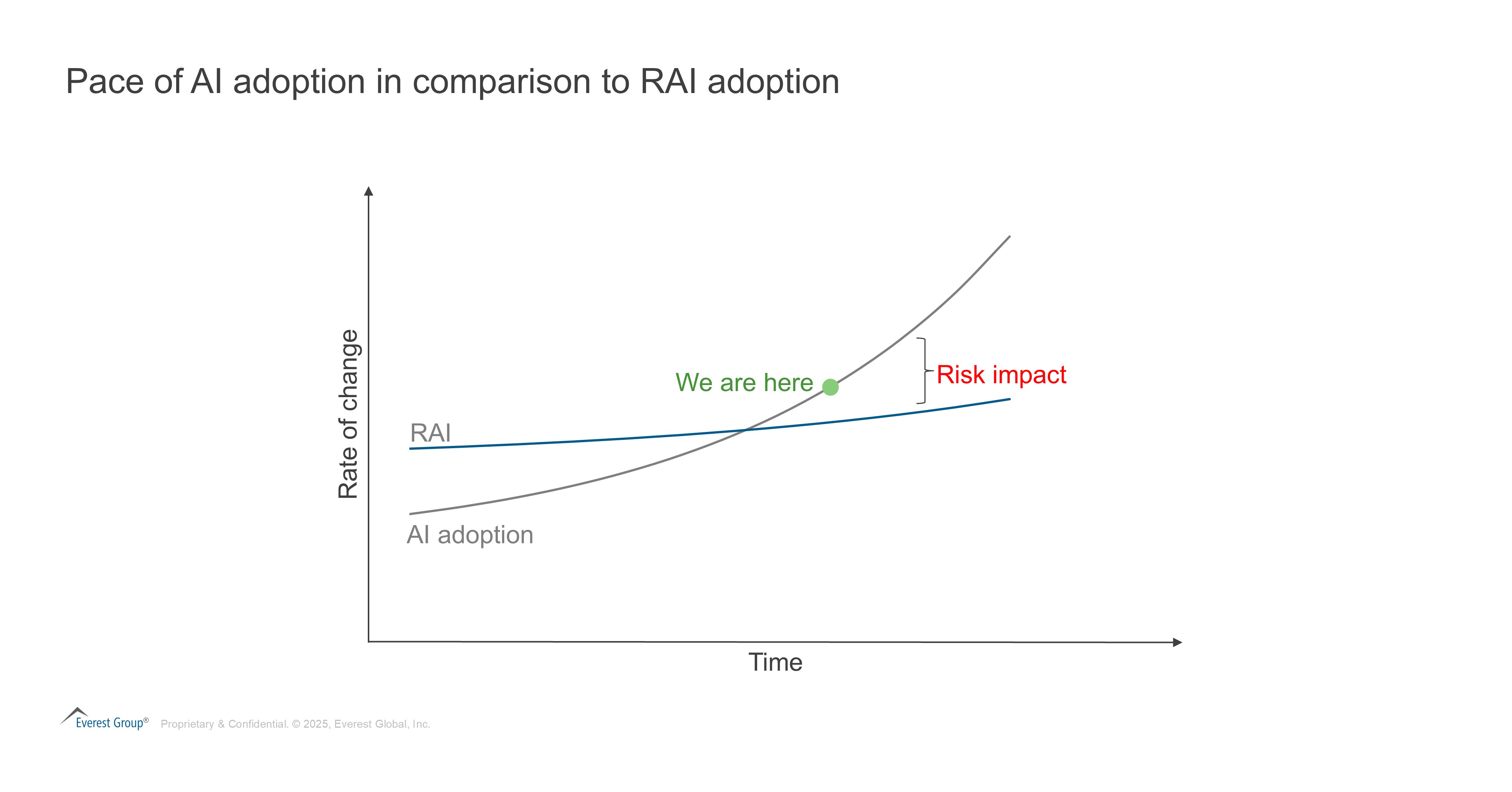

● AI growth outpaces policy formulation: AI adoption outpaces RAI implementation, creating governance gaps, as RAI policies fail to address new privacy and security risks. This lag can perpetuate biases, exacerbating ethical concerns like racial and gender disparities.

Exhibit 6 illustrates this growing challenge and highlights the need for more agile policy making.

● A lack of RAI metrics makes it difficult to measure progress or shortfalls: Soft metrics are vital for assessing ethical outcomes, accountability, and compliance in AI. The lack of clear RAI metrics undermines explainability, weakens governance, and increases ethical, legal, and reputational risks. For example, in sentiment analysis, unclear interpretations of terms like "like" and "overpriced" can erode stakeholder trust in AI's conclusions.
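To illustrate what a concrete RAI metric could look like, the sketch below computes a demographic parity difference: the gap in positive-decision rates between groups. It is only one of many possible fairness measures, not a metric prescribed by this blog. The function names, the sample decisions, and the group labels "A" and "B" are all hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions (1 = approved, 0 = rejected) and group labels.
decisions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap = demographic_parity_difference(decisions, groups)
print(f"Selection-rate gap between groups: {gap:.2f}")
```

A governance team could track a number like this over time and agree on a tolerance in advance; the right tolerance depends on the use case and applicable regulation.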

The AI governance landscape is evolving

The RAI space is rapidly evolving. Looking ahead, we highlight below the trends that will shape the RAI landscape in the months and years to come:

● Rise of RAI Officers: Enterprises around the globe are appointing RAI officers to ensure AI systems are deployed and governed in an ethical, fair, and transparent manner. RAI officers are typically responsible for ensuring compliance with regulatory requirements, conducting audits to assess AI fairness and accountability, and promoting responsible data usage. A notable example is Salesforce, which has established an AI ethics board to oversee fairness, accountability, and transparency in its AI models.

● RAI becomes part of employee training: Enterprises are enhancing AI governance readiness by implementing structured internal training programs and collaborating with educational institutions. They are also partnering with technology providers like Microsoft and Google to educate staff on fairness, bias mitigation, data privacy, and accountability in AI systems.

● Regulatory technology (RegTech) develops as a category: RegTech refers to technologies that help organizations manage regulatory compliance. They do so by monitoring AI systems in real time, checking that they meet evolving standards, and detecting risks like bias or ethical violations. RegTech solutions like TruNarrative and ThetaRay provide real-time monitoring and compliance for AI-driven processes. They help ensure that AI models adhere to regulatory requirements, such as anti-money laundering (AML) laws and data privacy regulations like the General Data Protection Regulation (GDPR).

● Human + AI workflows emerge: Organizations are increasingly adopting Human-in-the-Loop approaches, which primarily involve humans reviewing or overseeing AI-driven decisions. A typical example is IBM Watson for Oncology, which assists doctors by providing treatment recommendations based on medical literature, with oncologists making the final treatment decisions after reviewing the AI’s suggestions.

● AI governance emerges as a pressing remit for industry bodies: Global bodies like the International Organization for Standardization (ISO) and the IEEE have identified the need for broader AI regulation policies and are working on frameworks and regulatory standards for AI that can be adopted across geographies. Initiatives like the Global Partnership on AI (GPAI) are also bringing together governments, industry leaders, and academia to discuss and promote common standards and guidelines for AI development and deployment.
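The Human-in-the-Loop pattern described in the trends above can be sketched as a simple routing rule: AI outputs below a confidence threshold go to a human reviewer, and every routing decision is logged for later audit. This is a minimal illustration of one common variant, not how any named product works. The `Prediction` class, the `route` function, and the 0.85 threshold are assumptions made for the example.

```python
from dataclasses import dataclass

# Assumed threshold; in practice this would be set by governance policy.
REVIEW_THRESHOLD = 0.85


@dataclass
class Prediction:
    case_id: str
    recommendation: str
    confidence: float  # model's self-reported confidence, between 0 and 1


def route(prediction, audit_log, threshold=REVIEW_THRESHOLD):
    """Send low-confidence predictions to a human and record the decision."""
    if prediction.confidence >= threshold:
        outcome = "auto_approve"
    else:
        outcome = "human_review"
    audit_log.append((prediction.case_id, outcome, prediction.confidence))
    return outcome


# Hypothetical predictions used only to exercise the routing rule.
audit_log = []
predictions = [
    Prediction("case-001", "approve claim", 0.97),
    Prediction("case-002", "reject claim", 0.62),
]
for prediction in predictions:
    print(prediction.case_id, "->", route(prediction, audit_log))
```

In high-stakes settings such as the oncology example, the threshold could be set so that every recommendation goes to a human, with the log serving purely as an audit trail.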

As enterprises try to make sense of the rapidly changing AI landscape, RAI efforts cannot be a one-time investment. The organizations that differentiate themselves will be those that embed AI governance in their everyday way of working, making it second nature for their employees and leadership.

While the road ahead is challenging, it offers a new source of competitive advantage for those organizations that get it right.

Mphasis is pleased to share this blog from Everest Group authors Abhishek Sengupta, Practice Director, and Karthikey Kaushal, Senior Analyst.